Real-Time Data Processing with Kafka and Node.js

Efficient Data Pipelines Made Simple with Kafka + Node.js

Hello everyone! I'm Kiran Kuyate, and in this article, we'll explore how Apache Kafka and Node.js combine to create efficient real-time data pipelines.

In today's fast-paced world, real-time data processing has become a crucial aspect of many applications. Whether it's monitoring user activity, analyzing sensor data, or processing logs, the ability to handle data streams in real time is essential. Kafka, a distributed event streaming platform, provides a robust solution for building real-time data pipelines. In this article, we'll explore how to leverage Kafka with Node.js to create scalable and resilient real-time data processing systems, with a specific focus on incorporating live location sharing for delivery drivers as an example use case.

What is Kafka?

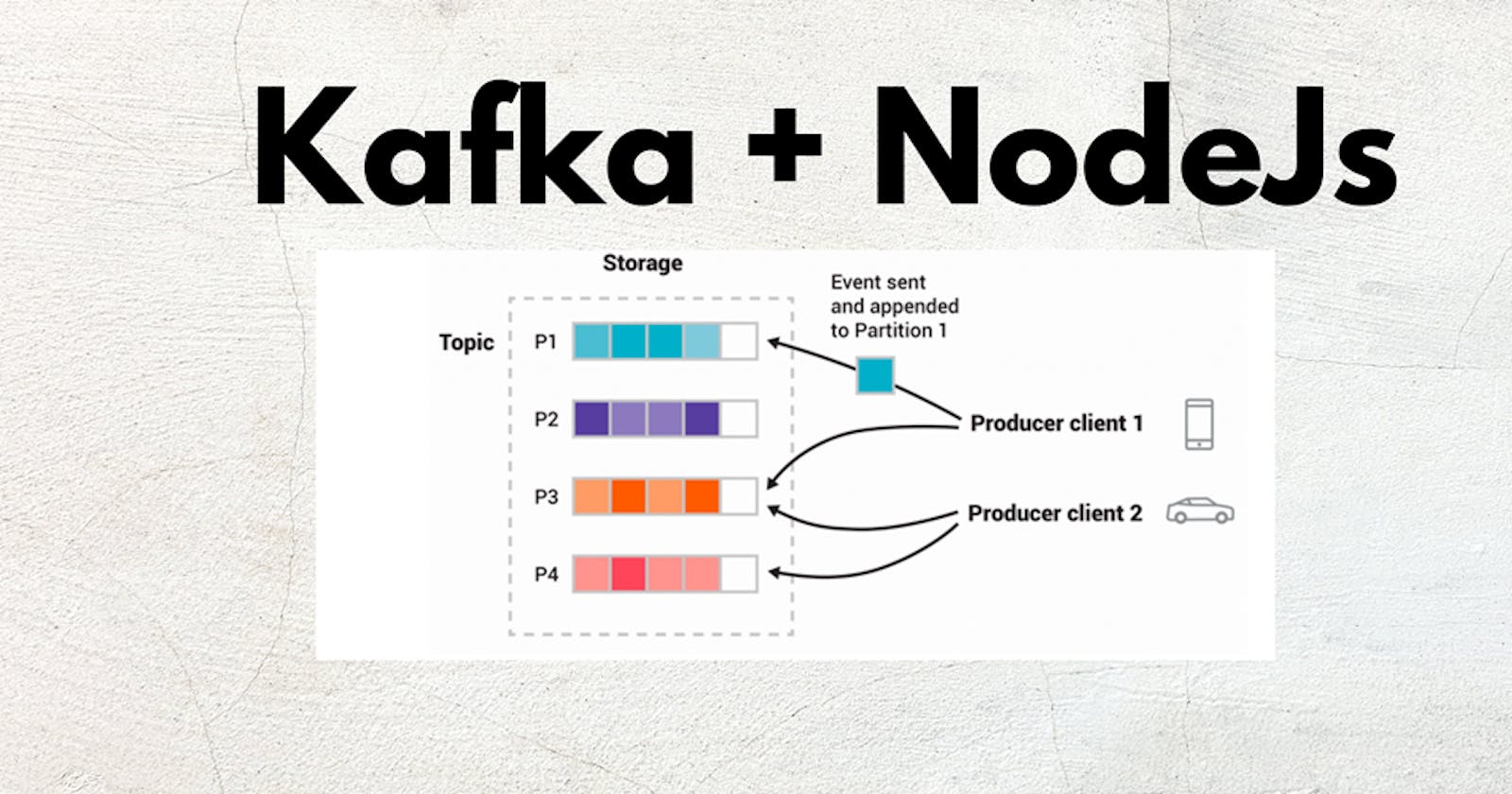

Apache Kafka is an open-source distributed event streaming platform used for building real-time data pipelines and streaming applications. It is designed to handle high throughput and provides features such as fault tolerance, scalability, and durability. A traditional database typically offers lower write throughput for this kind of workload, whereas Kafka appends messages to a disk-backed log, leans on sequential I/O and the operating system's page cache for speed, and retains messages for a configurable period. Kafka uses a publish-subscribe model, where producers publish messages to topics, and consumers subscribe to these topics to receive messages.
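To make the publish-subscribe model concrete, here is a toy in-memory sketch. It is not Kafka's API: the ToyTopic class and its methods are hypothetical names used only for illustration. It shows one idea: a topic behaves like an append-only log, and each subscriber tracks its own read position (offset), so several subscribers can read the same messages independently.

```javascript
// Toy model of a topic as an append-only log (illustration only, not Kafka's API).
class ToyTopic {
  constructor(name) {
    this.name = name;
    this.log = [];            // messages are appended and never removed on read
    this.offsets = new Map(); // subscriber id -> next offset to read
  }

  // A producer appends a message and gets back its offset in the log.
  publish(message) {
    this.log.push(message);
    return this.log.length - 1;
  }

  // A subscriber reads everything it has not seen yet.
  poll(subscriberId) {
    const from = this.offsets.get(subscriberId) ?? 0;
    const batch = this.log.slice(from);
    this.offsets.set(subscriberId, this.log.length);
    return batch;
  }
}

const topic = new ToyTopic('driver-locations');
topic.publish({ driver: 'kiran', lat: 19.99, lng: 73.78 });
topic.publish({ driver: 'shruti', lat: 18.52, lng: 73.85 });

const dashboardBatch = topic.poll('dashboard'); // both messages
const analyticsBatch = topic.poll('analytics'); // the same two messages again
const dashboardAgain = topic.poll('dashboard'); // nothing new since the last poll
console.log(dashboardBatch.length, analyticsBatch.length, dashboardAgain.length);
```

Reading a message does not remove it from the log, which is why the second subscriber still sees both messages.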

Let's understand this with an example.

Live Location Sharing for Delivery Drivers

Let's consider a scenario where a logistics company wants to track the real-time location of its delivery drivers using Kafka and Node.js. Each delivery driver's mobile device periodically sends location updates to a Kafka topic named "driver-locations." The company's backend system, acting as a consumer, subscribes to this topic to process the live location data and visualize it on a map for monitoring purposes.

Kafka and Node.js:

Producer: The mobile app installed on each delivery driver's device acts as a Kafka producer. It periodically sends the driver's location updates (latitude and longitude) to the "driver-locations" Kafka topic.

Kafka Cluster: The Kafka cluster, made up of brokers that host topics and their partitions, handles the ingestion and distribution of the location data. Replicating partitions across brokers provides fault tolerance, and spreading partitions across brokers provides scalability.

Consumer: The backend system of the logistics company acts as a Kafka consumer. It subscribes to the "driver-locations" topic to receive location updates in real time. The consumer processes these updates and updates the database with the latest location information for each driver.

Visualization: The logistics company's monitoring dashboard utilizes the updated location data from the database to display the live locations of all delivery drivers on a map interface. This allows the company to track the drivers' movements in real time and optimize route planning and resource allocation.

By leveraging Kafka with Node.js, the logistics company can efficiently handle the high volume of location data generated by its fleet of delivery drivers. The system is scalable, fault-tolerant, and capable of processing real-time data streams, making it ideal for building robust real-time location tracking applications.
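The consumer step described above (keep the latest location for each driver) can be sketched without Kafka at all. The snippet below is a minimal illustration under stated assumptions: the field names driverId, lat, lng, and timestamp are hypothetical, and an in-memory Map stands in for the database.

```javascript
// Keep only the most recent location per driver (a stand-in for the database update).
// Returns true if the update was stored, false if it was older than what we have.
function applyLocationUpdate(latestByDriver, update) {
  const current = latestByDriver.get(update.driverId);
  // Ignore late or duplicate updates so an old position never overwrites a newer one.
  if (current && current.timestamp >= update.timestamp) {
    return false;
  }
  latestByDriver.set(update.driverId, update);
  return true;
}

const latestByDriver = new Map();
const updates = [
  { driverId: 'd1', lat: 19.9975, lng: 73.7898, timestamp: 1000 },
  { driverId: 'd2', lat: 18.5204, lng: 73.8567, timestamp: 1005 },
  { driverId: 'd1', lat: 19.998, lng: 73.7901, timestamp: 1010 },
  { driverId: 'd1', lat: 19.997, lng: 73.789, timestamp: 990 }, // arrives late
];

for (const update of updates) {
  applyLocationUpdate(latestByDriver, update);
}

// The dashboard would read this map (or the real database) to draw the markers.
console.log([...latestByDriver.values()]);
```

Comparing timestamps is one simple way to cope with out-of-order delivery; a real system might rely on per-driver ordering within a partition instead.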

To get started with Apache Kafka, set up a development environment:

Prerequisites:

Knowledge:

Intermediate level understanding of Node.js.

Experience with designing distributed systems.

Tools:

Node.js: Download and install Node.js from the official website.

Docker: Download and install Docker to manage containers.

VSCode: Download and install Visual Studio Code for code editing.

Commands:

- Start a ZooKeeper container and expose port 2181:

    docker run -p 2181:2181 zookeeper

- This command starts a ZooKeeper container, which Kafka requires in this setup to coordinate its brokers (servers).

- Start a Kafka container, expose port 9092, and set the environment variables:

    docker run -p 9092:9092 \
      -e KAFKA_ZOOKEEPER_CONNECT=<PRIVATE_IP>:2181 \
      -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://<PRIVATE_IP>:9092 \
      -e KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1 \
      confluentinc/cp-kafka

Details of commands:

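-p 9092:9092: Exposes port 9092, which is the default port for Kafka.

-e KAFKA_ZOOKEEPER_CONNECT=<PRIVATE_IP>:2181: Specifies the ZooKeeper connection string. <PRIVATE_IP> is your machine's address on the local network (for example, its Wi-Fi address).

-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://<PRIVATE_IP>:9092: Specifies the advertised listener that clients will use to connect to Kafka.

-e KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1: Sets the replication factor for the internal offsets topic to 1. A single-broker setup needs this because the default of 3 cannot be satisfied with one broker. A factor of 1 means no replication, so it provides no fault tolerance and suits local development only.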

Once you've executed these commands, your Kafka setup should be running, and you can start developing applications that leverage Kafka for real-time data processing.
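Before writing any Kafka code, it can help to confirm that both containers are accepting connections. The sketch below uses only Node's built-in net module. The helper names isPortOpen and checkKafkaSetup are hypothetical, and it assumes the ports are reachable on localhost; substitute <PRIVATE_IP> if that is what you used. An open port only shows that something is listening, not that Kafka is fully healthy.

```javascript
const net = require('node:net');

// Resolve to true if a TCP connection to host:port succeeds within timeoutMs.
function isPortOpen(host, port, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (open) => {
      socket.destroy();
      resolve(open);
    };
    socket.setTimeout(timeoutMs);
    socket.once('connect', () => finish(true));
    socket.once('timeout', () => finish(false));
    socket.once('error', () => finish(false));
  });
}

// Assumption: both containers publish their ports on this host.
async function checkKafkaSetup(host = 'localhost') {
  const [zookeeper, kafka] = await Promise.all([
    isPortOpen(host, 2181),
    isPortOpen(host, 9092),
  ]);
  console.log(`ZooKeeper (2181): ${zookeeper ? 'reachable' : 'not reachable'}`);
  console.log(`Kafka (9092): ${kafka ? 'reachable' : 'not reachable'}`);
  return { zookeeper, kafka };
}

checkKafkaSetup();
```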

Now let's see the complete setup for the Node.js code, covering each component (client, admin, producer, and consumer). Then we will walk through the code to understand how it works.

Setting Up the Node.js Environment

Create a new directory for your project and navigate into it.

Initialize a new Node.js project using the following command:

    npm init -y

Install the kafkajs package:

    npm install kafkajs

Writing the Node.js Code

1. client.js

This file sets up the Kafka client using the kafkajs package.

    // client.js
    const { Kafka } = require('kafkajs');

    const kafka = new Kafka({
      clientId: 'kafka-app', // any name that identifies your application
      brokers: ['localhost:9092'] // broker address, e.g. '<PRIVATE_IP>:9092'
    });

    // Shared client, reused wherever Kafka is required
    module.exports = { kafka };
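The broker address in client.js is hard-coded. One common option is to read it from the environment so the same code runs against different clusters. The sketch below assumes an environment variable named KAFKA_BROKERS holding a comma-separated list; both that name and the brokersFromEnv helper are hypothetical and not part of kafkajs.

```javascript
// Hypothetical helper: build the broker list from an environment variable.
// KAFKA_BROKERS is an assumed name, e.g. "192.168.1.10:9092,192.168.1.11:9092".
function brokersFromEnv(env = process.env) {
  const fallback = ['localhost:9092'];
  const raw = env.KAFKA_BROKERS;
  if (!raw) {
    return fallback;
  }
  const brokers = raw
    .split(',')
    .map((entry) => entry.trim())
    .filter((entry) => entry.length > 0);
  // Fall back to the default if the variable contained only separators or spaces.
  return brokers.length > 0 ? brokers : fallback;
}

console.log(brokersFromEnv({ KAFKA_BROKERS: '192.168.1.10:9092, 192.168.1.11:9092' }));
console.log(brokersFromEnv({}));
```

In client.js, the result could be passed as the brokers option, for example brokers: brokersFromEnv().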

2. admin.js

Here we initialize an admin client to manage Kafka topics. It creates a topic named "driver-updates" with two partitions; you can create more depending on your use case.

    // admin.js
    const { kafka } = require('./client');

    async function init() {
      const admin = kafka.admin();
      console.log('Admin Connecting....');
      await admin.connect();
      console.log('Admin Connected!');

      console.log('Creating topics...');
      await admin.createTopics({
        topics: [{ topic: 'driver-updates', numPartitions: 2 }]
      });
      console.log('Topic Creation Successful [driver-updates]');

      console.log('Disconnecting Admin...');
      await admin.disconnect();
    }

    init();
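If admin.js is run more than once, the topic already exists on the second run, yet the script still logs a success message. A small wrapper can make the outcome explicit. The sketch below relies on the KafkaJS admin methods listTopics() and createTopics(). The ensureTopic helper and the fake admin object are hypothetical and exist only so the example runs without a broker.

```javascript
// Create the topic only when it does not exist yet.
// `admin` is expected to be a connected KafkaJS admin client (kafka.admin()).
async function ensureTopic(admin, topic, numPartitions) {
  const existing = await admin.listTopics();
  if (existing.includes(topic)) {
    return false; // already there, nothing to do
  }
  await admin.createTopics({ topics: [{ topic, numPartitions }] });
  return true;
}

// Stand-in for the admin client so this sketch runs without Kafka (illustration only).
function createFakeAdmin(initialTopics = []) {
  const topics = new Set(initialTopics);
  return {
    listTopics: async () => [...topics],
    createTopics: async ({ topics: specs }) => {
      specs.forEach((spec) => topics.add(spec.topic));
      return true;
    },
  };
}

async function demo() {
  const admin = createFakeAdmin();
  const first = await ensureTopic(admin, 'driver-updates', 2);
  const second = await ensureTopic(admin, 'driver-updates', 2);
  console.log(`created on first call: ${first}, on second call: ${second}`);
  return [first, second];
}

const demoResult = demo();
```

In admin.js, the real admin client would be passed in place of the fake one, after admin.connect().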

3. producer.js

This script produces messages to the "driver-updates" topic. Each message carries the rider's name and location, and the partition is chosen from the location. Here the input is typed into the terminal; in a real application you would feed the producer from your own data source.

    // producer.js
    const { kafka } = require('./client');
    const readline = require('readline');

    const rl = readline.createInterface({
      input: process.stdin,
      output: process.stdout
    });

    async function init() {
      const producer = kafka.producer();
      console.log('Connecting Producer....');
      await producer.connect();
      console.log('Producer Connected!');

      rl.setPrompt('>> ');
      rl.prompt();

      rl.on('line', async (line) => {
        // Expected input: "<rider> <location>", e.g. "kiran n"
        const [rider, location] = line.trim().split(/\s+/);
        if (!rider || !location) {
          console.log('Usage: <rider> <location>');
          rl.prompt();
          return;
        }
        await producer.send({
          topic: 'driver-updates',
          messages: [
            {
              // Location "n" goes to partition 0, anything else to partition 1
              partition: location.toLowerCase() === 'n' ? 0 : 1,
              key: 'location-update',
              value: JSON.stringify({ name: rider, loc: location })
            }
          ]
        });
        rl.prompt();
      }).on('close', async () => {
        await producer.disconnect();
        console.log('Producer Disconnected!');
      });
    }

    init();

Use case: In our food delivery service example for KwayFood, the producer plays a crucial role in the real-time location tracking system. Each delivery rider's smartphone, like Kiran's or Shruti's, acts as a Kafka producer. As Kiran starts his delivery shift for KwayFood, his smartphone periodically sends updates containing his current location to Kafka. These updates, along with Shruti's, are serialized into messages and published to the "driver-updates" topic.

For instance, as Kiran delivers orders around the city, his smartphone continuously sends his GPS coordinates to Kafka. Each time Kiran's location changes, a new message is produced and sent to the "driver-updates" topic. Shruti's smartphone does the same as she navigates through the city to deliver orders.

This real-time tracking capability is crucial for KwayFood's operations, enabling timely delivery.
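The producer above sets the partition explicitly and gives every message the same key. A common alternative is to leave the partition out and use the rider's name as the key. Kafka's default partitioning is based on a hash of the key, so all updates for one rider land on the same partition and stay in order. The helper below is a hypothetical sketch of that idea: it only builds the message object and validates the input, so it runs without a broker.

```javascript
// Turn one line of terminal input ("<rider> <location>") into a message object
// shaped like an entry of `messages` in producer.send(). Returns null for bad input.
function buildLocationMessage(line) {
  const [rider, location] = line.trim().split(/\s+/);
  if (!rider || !location) {
    return null;
  }
  return {
    // Same rider -> same key -> same partition when no partition is set explicitly.
    key: rider,
    value: JSON.stringify({ name: rider, loc: location }),
  };
}

console.log(buildLocationMessage('kiran n'));
console.log(buildLocationMessage('shruti'));
```

Passing the returned object in the messages array of producer.send(), without a partition field, leaves the choice of partition to the partitioner.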

4. consumer.js

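The consumer script subscribes to the "driver-updates" topic and receives messages in real time. The consumer group name is passed on the command line. Consumers that share a group split the topic's partitions between them, while each separate group receives every message.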

    // consumer.js
    const { kafka } = require('./client');

    // The consumer group id is passed on the command line
    const group = process.argv[2];
    if (!group) {
      console.error('Usage: node consumer.js <group-id>');
      process.exit(1);
    }

    async function init() {
      const consumer = kafka.consumer({ groupId: group });
      await consumer.connect();

      await consumer.subscribe({ topics: ['driver-updates'], fromBeginning: true });

      await consumer.run({
        eachMessage: async ({ topic, partition, message }) => {
          console.log(
            `${group} --> [${topic}]: Part:${partition}: ${message.value.toString()}`
          );
        }
      });
    }

    init();
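The consumer above prints the raw message value. In a real handler you would usually decode it and skip anything malformed rather than let one bad message crash the consumer. The decodeLocation helper below is a hypothetical sketch. It assumes the JSON shape written by producer.js ({ name, loc }) and that message.value is a Buffer or null, as KafkaJS delivers it.

```javascript
// Decode a Kafka message value into { name, loc }, or return null if it is unusable.
function decodeLocation(message) {
  if (!message.value) {
    return null; // messages can carry a null value
  }
  try {
    const parsed = JSON.parse(message.value.toString());
    if (typeof parsed.name !== 'string' || typeof parsed.loc !== 'string') {
      return null; // valid JSON, but not the shape we expect
    }
    return { name: parsed.name, loc: parsed.loc };
  } catch (err) {
    return null; // not valid JSON (or not an object)
  }
}

const good = { value: Buffer.from(JSON.stringify({ name: 'kiran', loc: 'n' })) };
const bad = { value: Buffer.from('not json') };
console.log(decodeLocation(good));
console.log(decodeLocation(bad));
```

Inside eachMessage, a null result could be logged and skipped while valid updates continue to be processed.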

In the world of Apache Kafka, a consumer is like a subscriber to a newspaper. It's a part of the system that reads or "consumes" messages from Kafka topics.

In our KwayFood story, think of the consumer as a hungry customer eagerly waiting for their food delivery. As Kiran and Shruti, the delivery riders, make their way through the city, their location updates are like delicious meals being prepared in the KwayFood kitchen.

The consumer, represented by KwayFood's server, eagerly waits for these updates to arrive, just like the hungry customer waiting for their meal. When a location update message arrives in Kafka, the consumer quickly grabs it and processes it, just as the customer happily receives their awaited meal.

Note: Apache Kafka consumers can be categorized into two types: group consumers, which share message processing within a consumer group, and non-group consumers, which process messages independently.
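To see how a consumer group shares work, the toy simulation below distributes a topic's partitions among the members of one group. It is a simplified round-robin, written only for illustration: the assignPartitions function is hypothetical and is not the assignment code Kafka or KafkaJS actually runs.

```javascript
// Simplified round-robin assignment of partitions to the members of ONE group.
function assignPartitions(partitions, members) {
  const assignment = new Map(members.map((member) => [member, []]));
  if (members.length === 0) {
    return assignment; // no consumers, nothing to assign
  }
  partitions.forEach((partition, index) => {
    const member = members[index % members.length];
    assignment.get(member).push(partition);
  });
  return assignment;
}

const partitions = [0, 1]; // "driver-updates" was created with two partitions

// Two consumers in the same group split the partitions between them.
const sameGroup = assignPartitions(partitions, ['consumer-a', 'consumer-b']);
// A consumer alone in its own group is assigned every partition.
const ownGroup = assignPartitions(partitions, ['consumer-c']);
// A third consumer in a two-partition group would sit idle.
const crowdedGroup = assignPartitions(partitions, ['c1', 'c2', 'c3']);

console.log(Object.fromEntries(sameGroup));
console.log(Object.fromEntries(ownGroup));
console.log(Object.fromEntries(crowdedGroup));
```

The last case shows why adding more consumers than partitions to a group does not increase parallelism.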

Running the Application

To run the application:

Open four terminal windows.

In the first window, create the "driver-updates" topic:

    node admin.js

In the second window, start a consumer in Consumer Group 1:

    node consumer.js group1

In the third window, start a consumer in Consumer Group 2:

    node consumer.js group2

In the fourth window, start the producer:

    node producer.js

Enter the rider's name and location at the prompt, separated by a space. If the location is "n", the message is written to partition 0; otherwise it is written to partition 1. Because group1 and group2 are separate consumer groups, both receive every message. To see the partitions split between consumers, start two consumers with the same group name instead.

In this setup, the producer generates messages based on rider locations, while consumers process them in real time according to their own requirements. You can use this template code as a foundation for integrating Kafka into your Node.js application.

If you have any feedback or suggestions, feel free to share them.

Thanks 💖🙌🏻